Research Overview

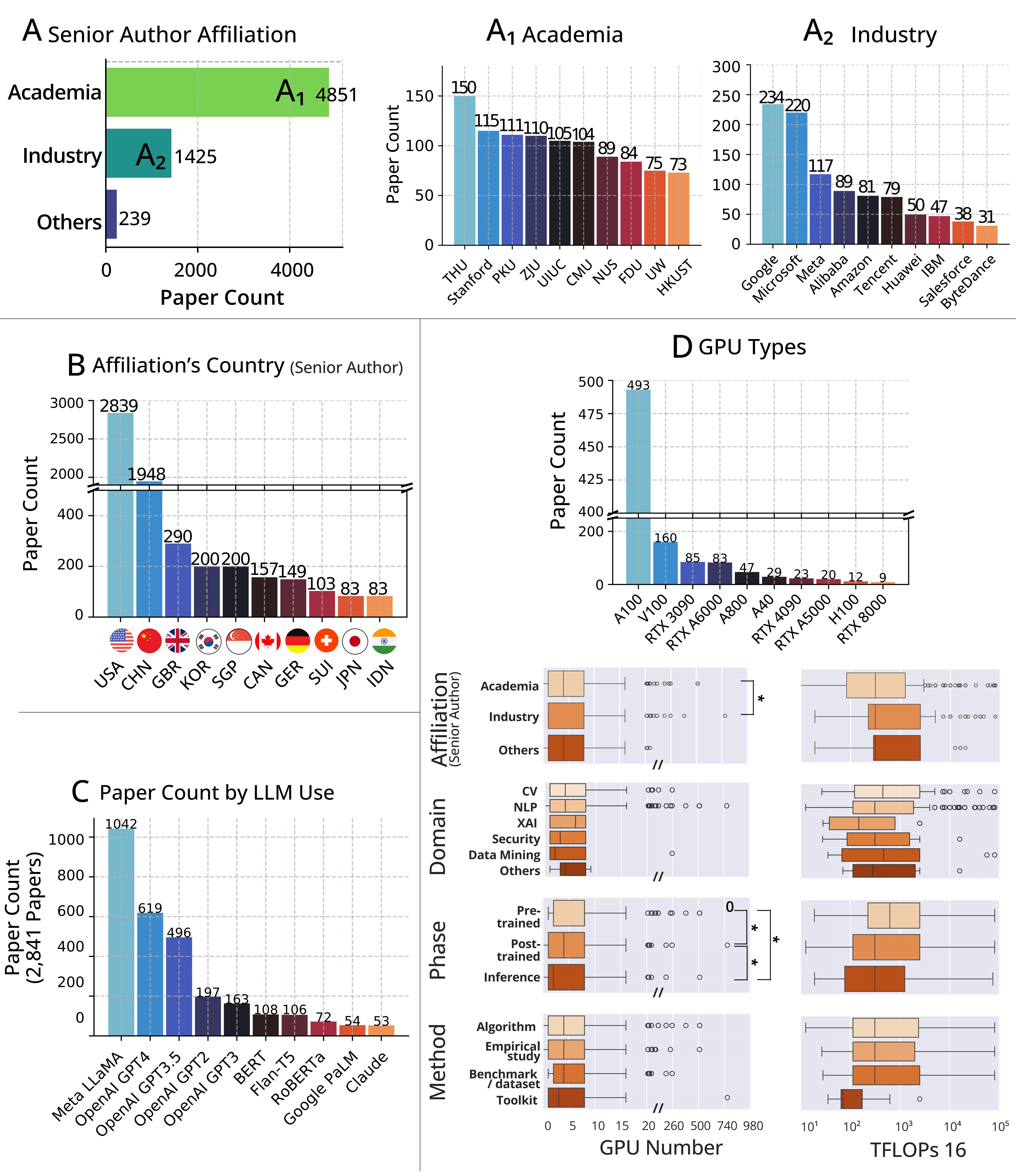

Cutting-edge research in Artificial Intelligence (AI) requires considerable resources, including Graphics Processing Units (GPUs), data, and human resources. In this paper, we evaluate the relationship between these resources and the scientific advancement of foundation models (FMs). We reviewed 6,517 FM papers published between 2022 and 2024 and surveyed 229 first authors to understand the impact of computing resources on scientific output. We find that increased computing is correlated with individual paper acceptance rates and national funding allocations, but is not correlated with research environment (academic or industrial), domain, or study methodology.

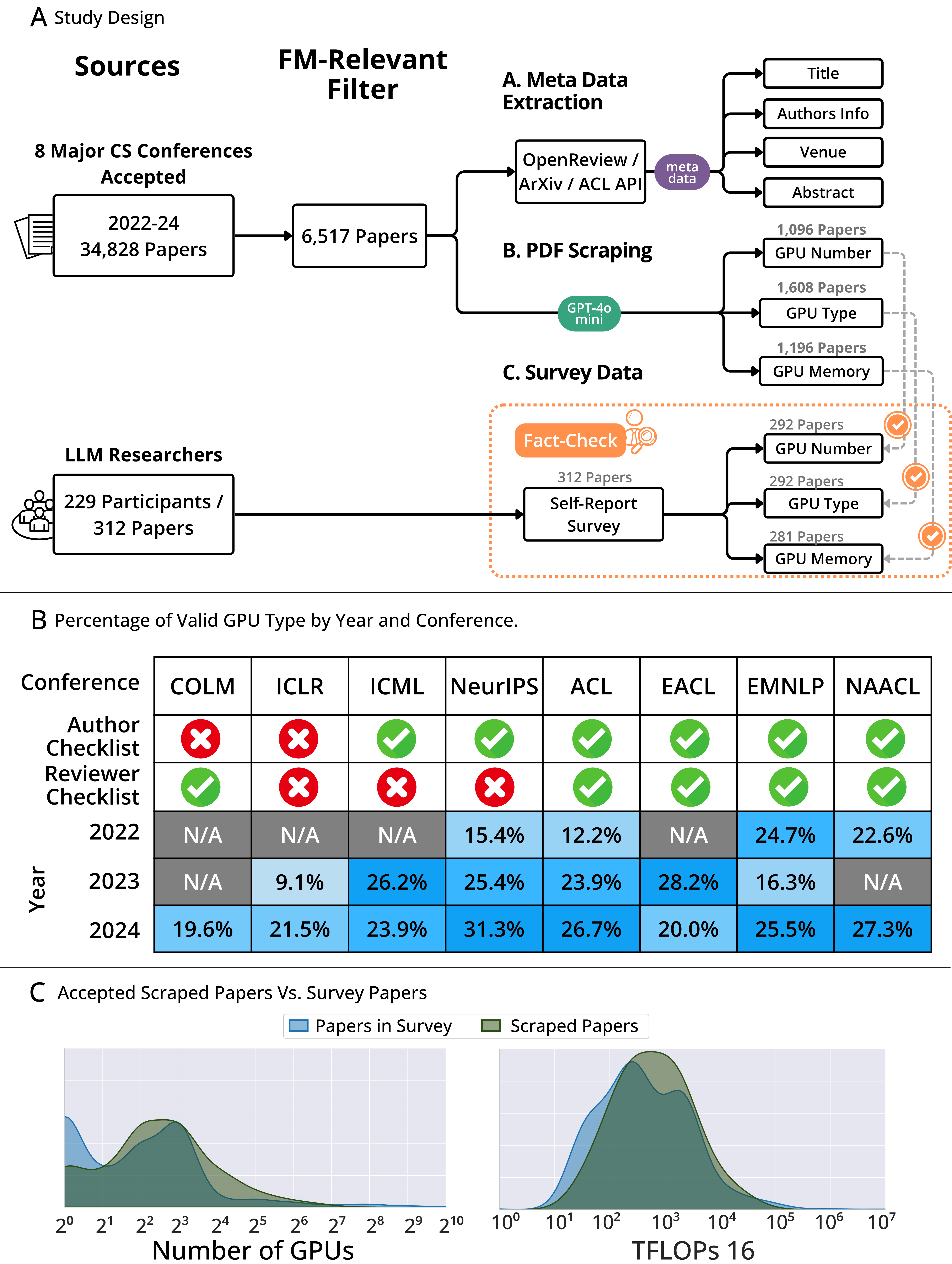

We analyze 34,828 accepted papers published between 2022 and 2024, identify 5,889 FM papers among them, and examine GPU access and TFLOPs alongside their correlation with research outcomes. We further incorporate insights from 229 authors across 312 papers about resource usage and impact.

Discussion

More/Better?

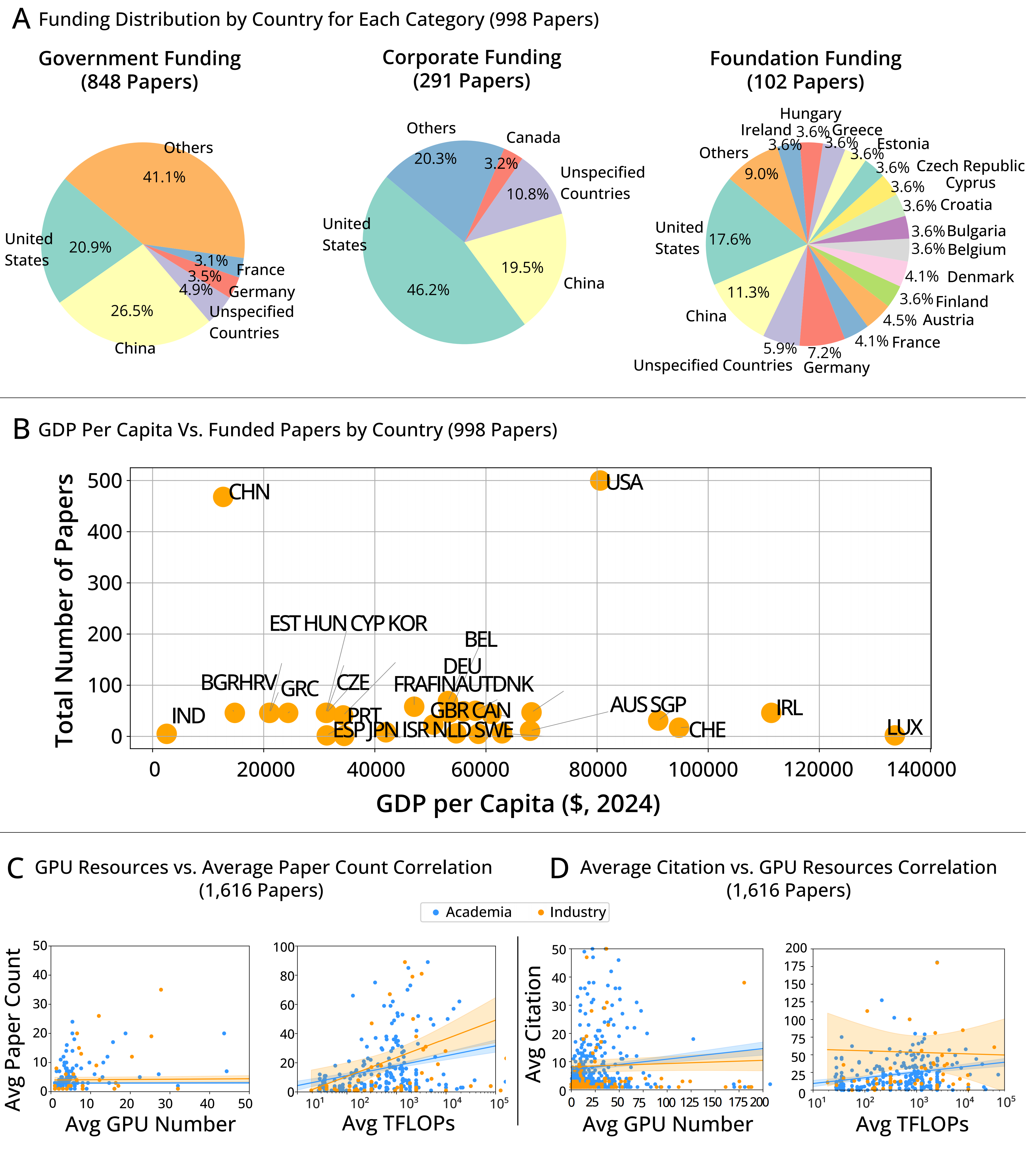

Our study provides empirical evidence that increasing the number of GPUs does not inherently lead to higher research impact. This is important as unchecked expansion of computational requirements further exacerbates environmental concerns (Schwartz et al., 2020). Furthermore, the current landscape of FM research remains highly centralized, with China and the United States disproportionately dominating the field, as access to computing resources often serves as a fundamental prerequisite for participation (Lehdonvirta, 2024).

We note that many papers utilized multiple GPUs for different tasks, making it challenging to clearly categorize GPU numbers, types, and memory configurations. Consequently, our calculated FLOPs values may differ slightly from the actual computational resources reported in these studies.
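To make the categorization difficulty concrete, the following is a minimal sketch of how aggregate peak compute could be estimated when a paper reports several GPU types. It is not the procedure used in the study: the `GpuGroup` schema, the `total_peak_tflops` helper, and the throughput figures are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuGroup:
    """One homogeneous set of GPUs reported in a paper (hypothetical schema)."""
    model: str
    count: int


def total_peak_tflops(groups, peak_tflops_per_gpu):
    """Sum count * per-GPU peak throughput over all reported GPU groups.

    Raises KeyError when a GPU model has no throughput entry, so that
    unknown hardware is flagged instead of silently counted as zero.
    """
    total = 0.0
    for group in groups:
        if group.model not in peak_tflops_per_gpu:
            raise KeyError(f"no peak TFLOPs entry for {group.model!r}")
        total += group.count * peak_tflops_per_gpu[group.model]
    return total


# Placeholder throughput figures for illustration only, not vendor specifications.
PEAK = {"gpu_a": 100.0, "gpu_b": 25.0}

# A hypothetical paper that used 8 GPUs of one type and 4 of another.
paper = [GpuGroup("gpu_a", 8), GpuGroup("gpu_b", 4)]
print(total_peak_tflops(paper, PEAK))  # 900.0
```

A sum like this treats every GPU as running at peak throughput for the whole project, which is one reason an estimate built this way can diverge from the compute a study actually consumed.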

Open Reporting

While initiatives such as the computing statement required by some conferences acknowledge the role of computational resources, those resources remain insufficiently reported. Greater transparency in GPU usage (Bommasani et al., 2025) and recognition of computing resources, including GPU availability, storage, and human labor, are integral components in evaluating AI research. Such transparency will ensure the long-term sustainability of AI research (Maslej et al., 2025).

While we quantified GPU usage and authorship, other resource costs are often overlooked. The cost of failed experiments is rarely acknowledged: published research highlights successful outcomes, yet unsuccessful attempts are crucial to the progress of FM research. Furthermore, infrastructure costs, which vary between countries with gross domestic product (GDP) and AI policies, are generally not considered.

Automated Evaluation

We relied on GPT-4o to extract and summarize detailed information from PDF files, a method that is susceptible to inaccuracies. To minimize errors, we performed ten rounds of GPT-4o extraction and aggregated the results through majority voting. Nevertheless, the extracted information may still contain inaccuracies, so it requires careful interpretation and validation.
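The majority-voting step can be illustrated with a short sketch. This is an assumed form of the aggregation, not the study's actual pipeline: the `majority_vote` function, the normalization (trimming and lowercasing), and the `min_share` threshold are hypothetical choices made for the example.

```python
from collections import Counter


def majority_vote(answers, min_share=0.5):
    """Return the most common non-empty answer across extraction rounds.

    Answers are normalized by stripping whitespace and lowercasing (an
    assumption of this sketch). Returns None when no answer is given in
    more than `min_share` of all rounds, so weak agreement can be sent
    to manual review instead of being accepted.
    """
    cleaned = [a.strip().lower() for a in answers if a and a.strip()]
    if not cleaned:
        return None
    value, count = Counter(cleaned).most_common(1)[0]
    return value if count / len(answers) > min_share else None


# Ten hypothetical extraction rounds for a "number of GPUs" field.
rounds = ["8", "8", "8 ", "4", "8", "8", "", "8", "8", "16"]
print(majority_vote(rounds))  # 8

# No answer exceeds half of the rounds, so nothing is accepted.
print(majority_vote(["A100", "a100", "V100", "H100"]))  # None
```

Returning `None` for fields without a clear majority keeps uncertain extractions visible, which fits the caution above that the extracted information still needs validation.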

Research Team

Yuexing Hao

EECS, MIT & Cornell University

Yue Huang

CSE, University of Notre Dame

Haoran Zhang

EECS, MIT

Chenyang Zhao

CS, University of California, Los Angeles

Zhenwen Liang

CSE, University of Notre Dame

Paul Pu Liang

EECS, MIT

Yue Zhao

School of Advanced Computing, USC

Lichao Sun

CS, Lehigh University

Saleh Kalantari

Cornell University

Xiangliang Zhang

CSE, University of Notre Dame

Marzyeh Ghassemi

EECS, MIT